Monitoring Survey - Effectiveness

In previous posts I covered monitoring environments and check implementation, metrics, the primary tool people use for monitoring, and the demographics of the survey.

In this post I look at the questions about the effectiveness of monitoring, how people handle alerting, and the use of configuration management software.

As I’ve mentioned in previous posts, the survey received 1,016 responses, of which 866 were complete; my analysis only includes complete responses.

This post will cover the questions:

10. Do you ever have unanswered alerts in your monitoring environment?

11. How often does something go wrong that IS NOT detected by your monitoring?

12. Do you use a configuration management tool like Chef, Puppet, or Ansible to manage your monitoring infrastructure?

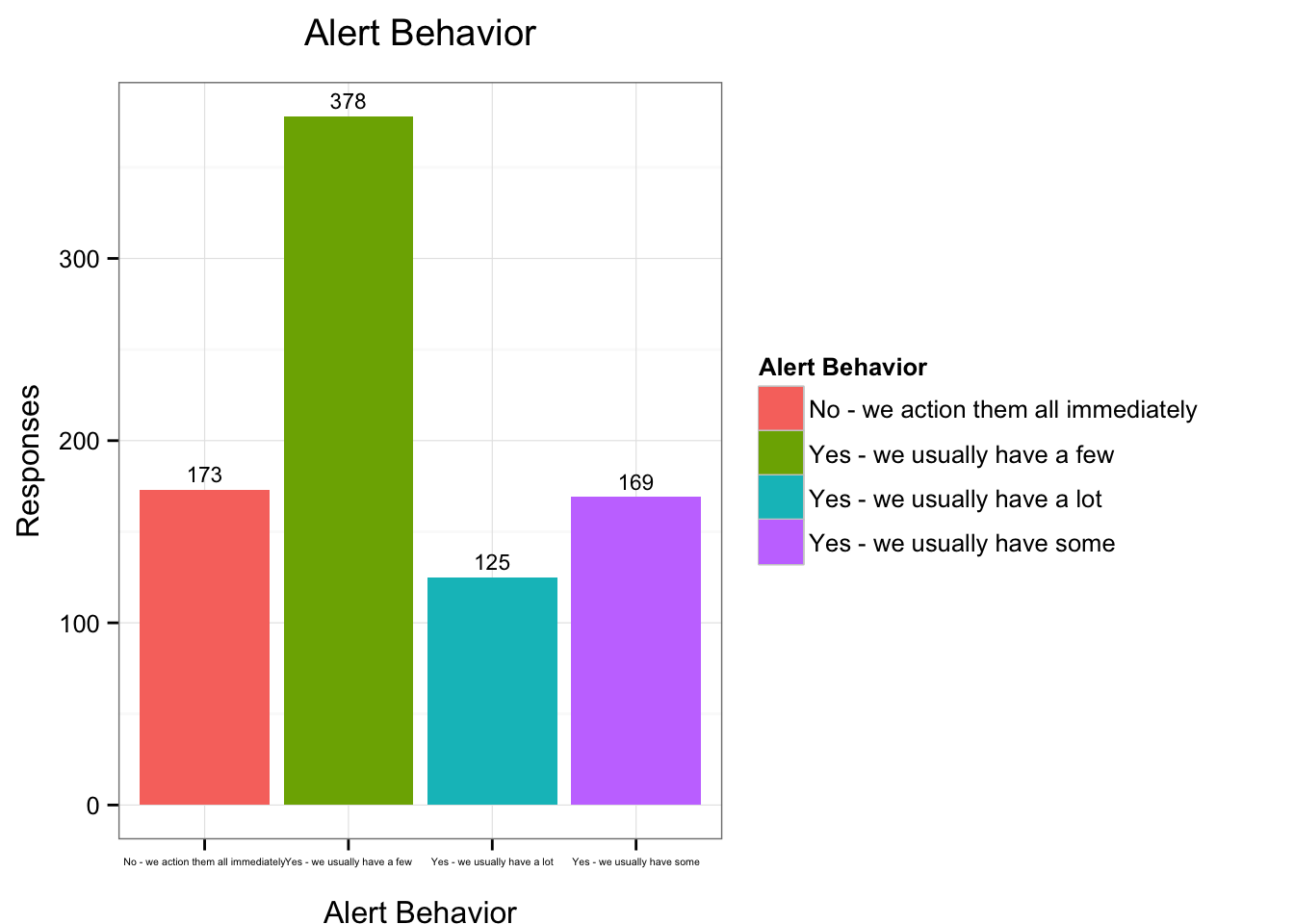

Do you ever have unanswered alerts in your monitoring environment?

Question 10 measures alerting hygiene: how people respond to alerts. I wanted to see how many people had outstanding alerts and how many actioned them immediately.

Each respondent had the option to answer the question with:

- No - we action them all immediately

- Yes - we usually have a few

- Yes - we usually have some

- Yes - we usually have a lot

I’ve provided a graph showing the distribution of answers.

We can see that the largest group of respondents, 378 or 45%, usually have a few unanswered alerts. The next largest group, 173 or 20.4% of respondents, action all alerts immediately. A slightly smaller group, 169 or 19%, usually have some alerts that are not actioned. The last group, 125 or 14.5% of respondents, indicated that they usually have a lot of alerts that are not actioned.

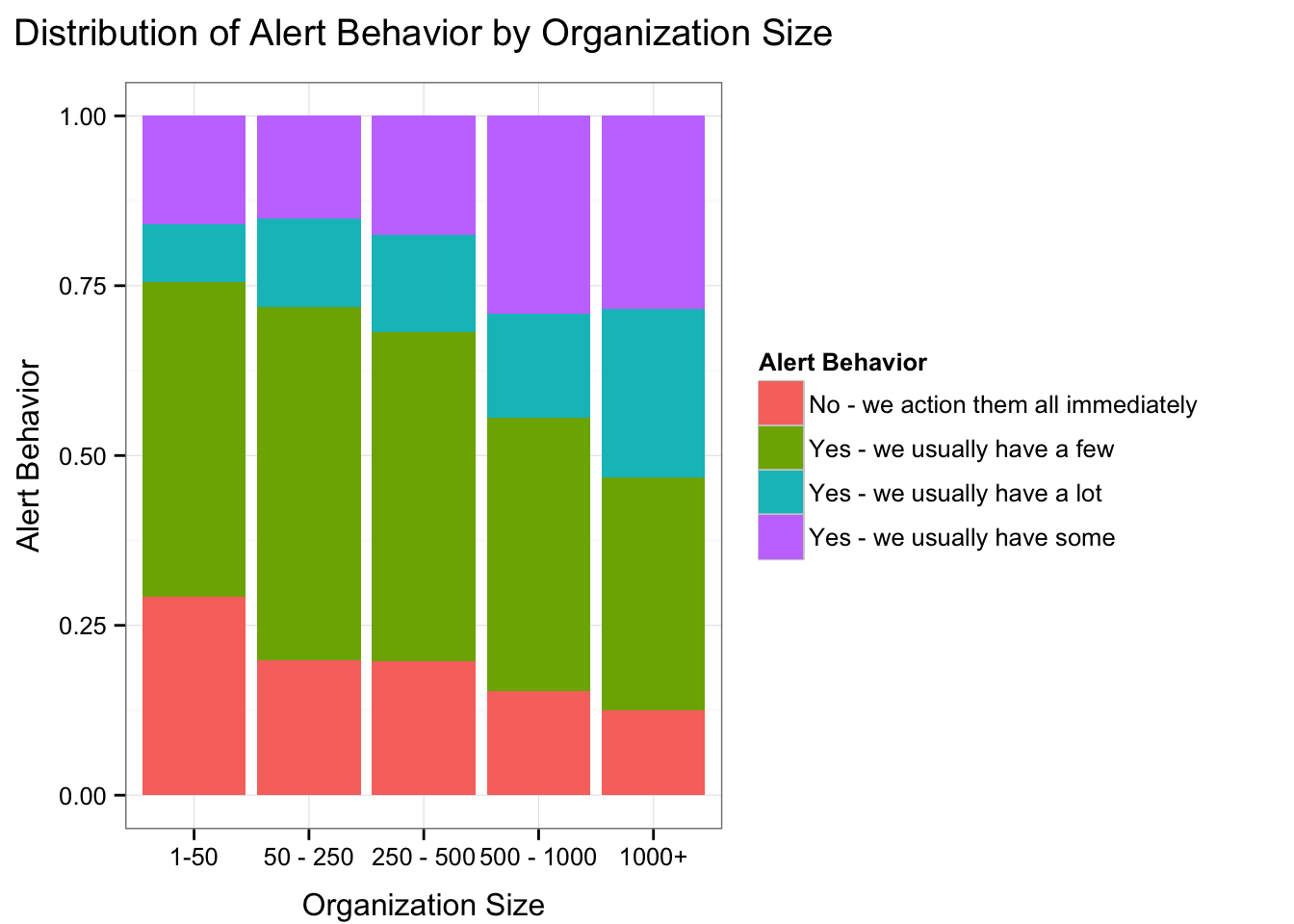

I also broke down alert behavior by organization size.

The patterns in this breakdown felt very familiar: as organizations grow, fewer respondents action alerts immediately and more report a large volume of alerts that are not actioned.

Overall the results reflect anecdotal discussion about alert handling. In the next survey I want to see whether there is a way to ask about alert fatigue and how to measure it.

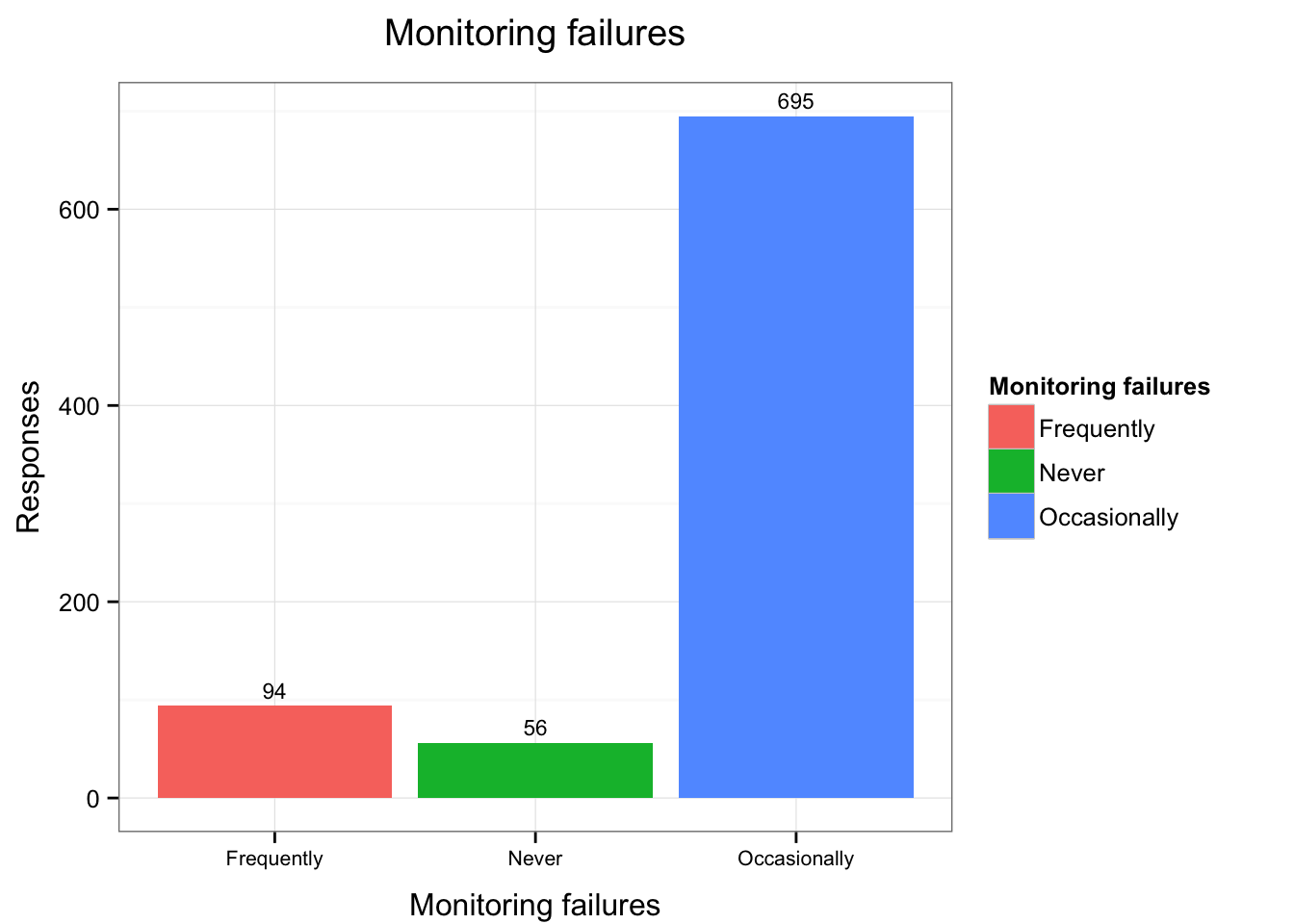

How often does something go wrong that IS NOT detected by your monitoring?

Question 11 asked about outages and failures in environments that are NOT detected via monitoring. The respondents had the option of answering:

- Frequently

- Occasionally

- Never

I’ve graphed the responses here:

We can see that 82% of respondents had something occasionally go wrong that wasn’t detected by monitoring. A further 94 respondents or 11% stated that failures frequently occurred that were not detected by monitoring. 56 respondents or 6% boldly claimed there were never undetected failures in their environments.

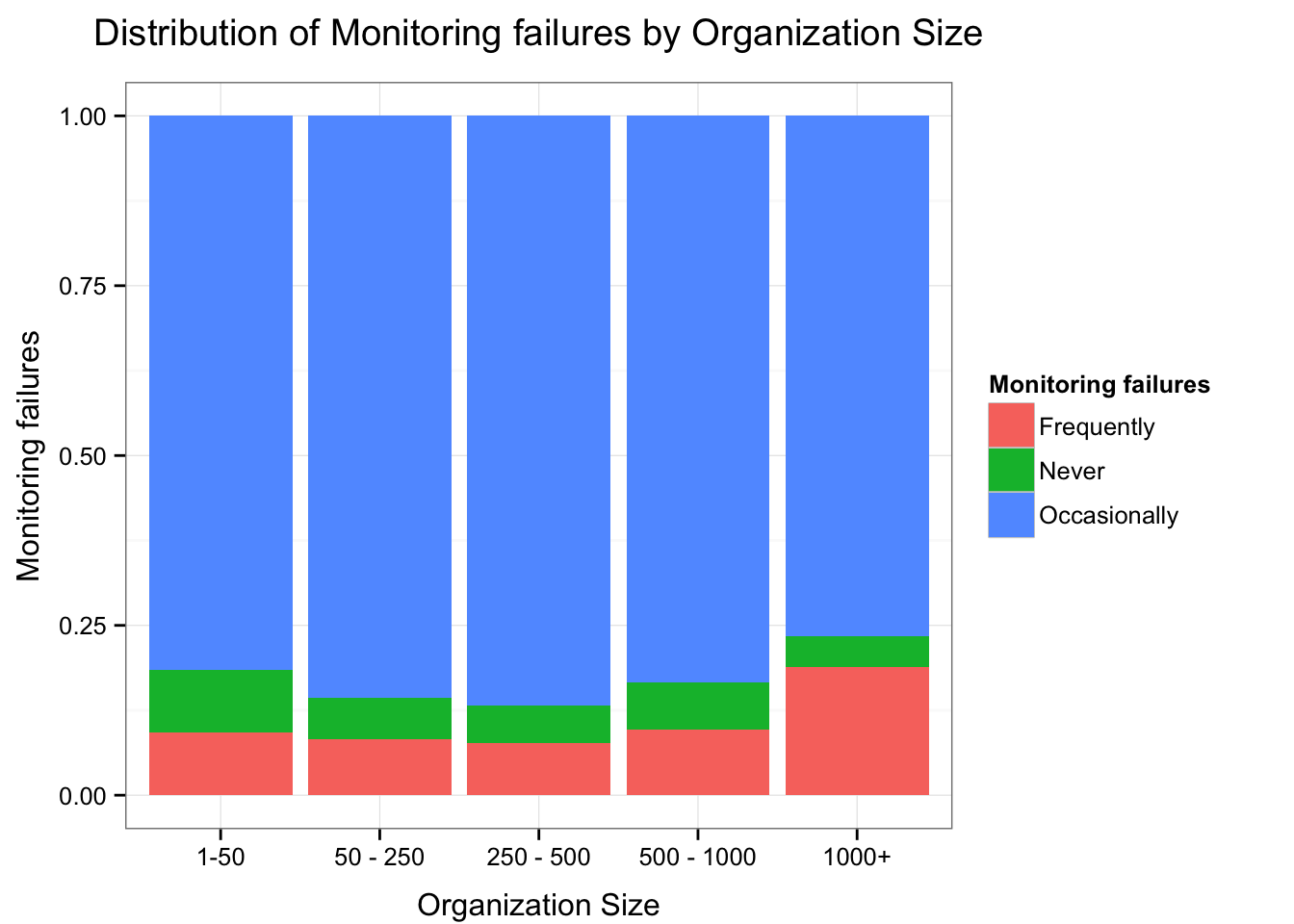

I further analyzed the responses by organization size.

Again we see some familiar patterns, with undetected failures occurring more frequently in larger organizations.

Do you use a configuration management tool like Chef, Puppet, or Ansible to manage your monitoring infrastructure?

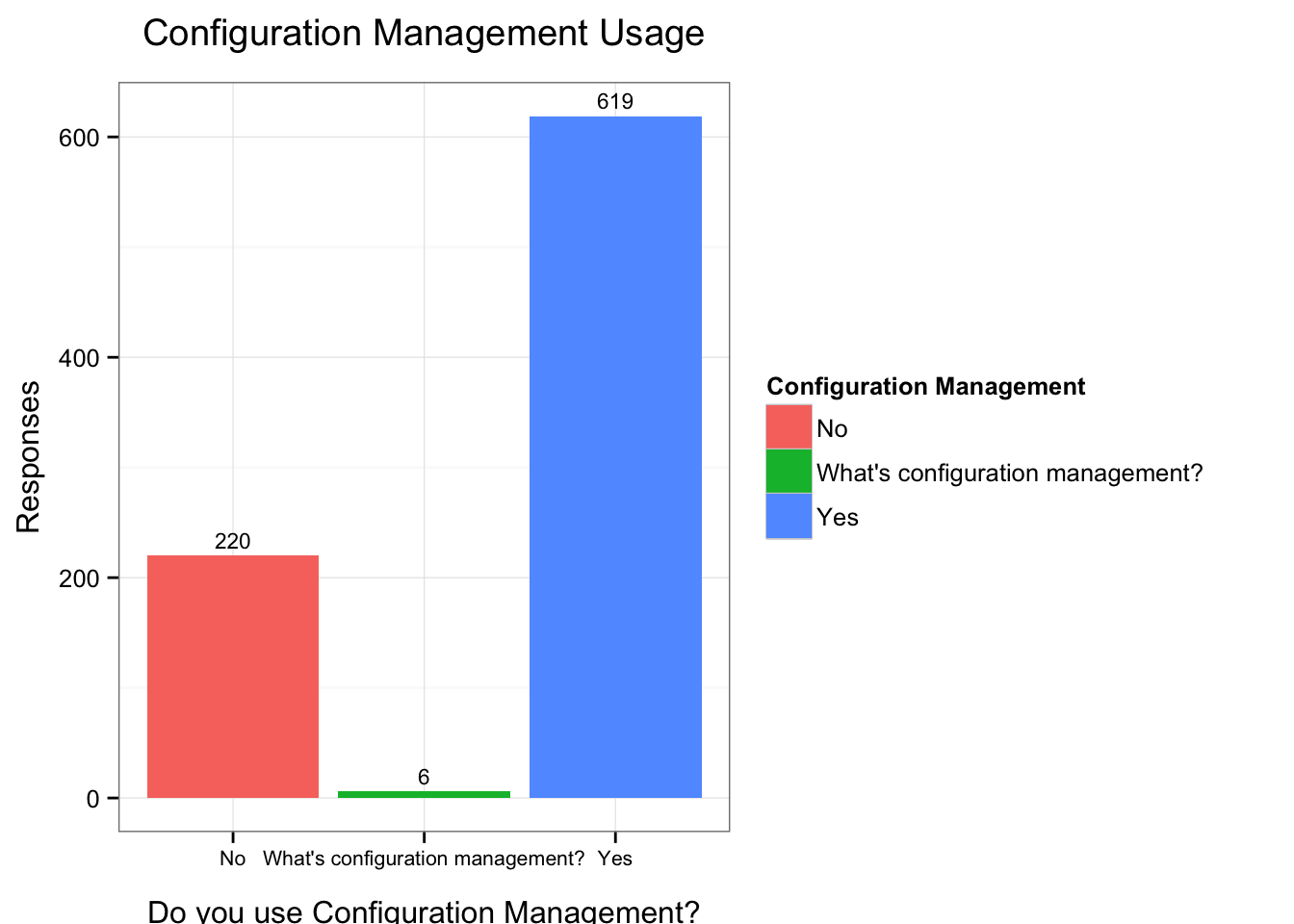

The last question, Question 12, asked respondents whether they used configuration management to manage their monitoring environment.

619 respondents, or 73%, used configuration management to manage their monitoring, which is more than I expected but likely consistent with the survey’s potential selection bias. 220 respondents, or 26%, did not use configuration management, and 6 respondents, or 0.7%, did not know what configuration management was.
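To make the arithmetic behind these percentages explicit, here is a minimal Python sketch that turns the Question 12 answer counts into shares. It assumes the denominator is everyone who answered this question (619 + 220 + 6 = 845); the answer labels are paraphrased and hypothetical, not the survey's exact wording.

```python
# Answer counts for Question 12, taken from the results above.
# The labels are paraphrased (hypothetical), not the survey's exact options.
counts = {
    "Yes": 619,
    "No": 220,
    "Don't know what configuration management is": 6,
}


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each answer's share of respondents as a percentage."""
    # Assumption: the denominator is everyone who answered this question.
    total = sum(counts.values())
    return {answer: 100 * n / total for answer, n in counts.items()}


for answer, pct in shares(counts).items():
    print(f"{answer}: {pct:.1f}%")
```

With these counts the shares come out at roughly 73.3%, 26.0%, and 0.7%, matching the rounded figures quoted above.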

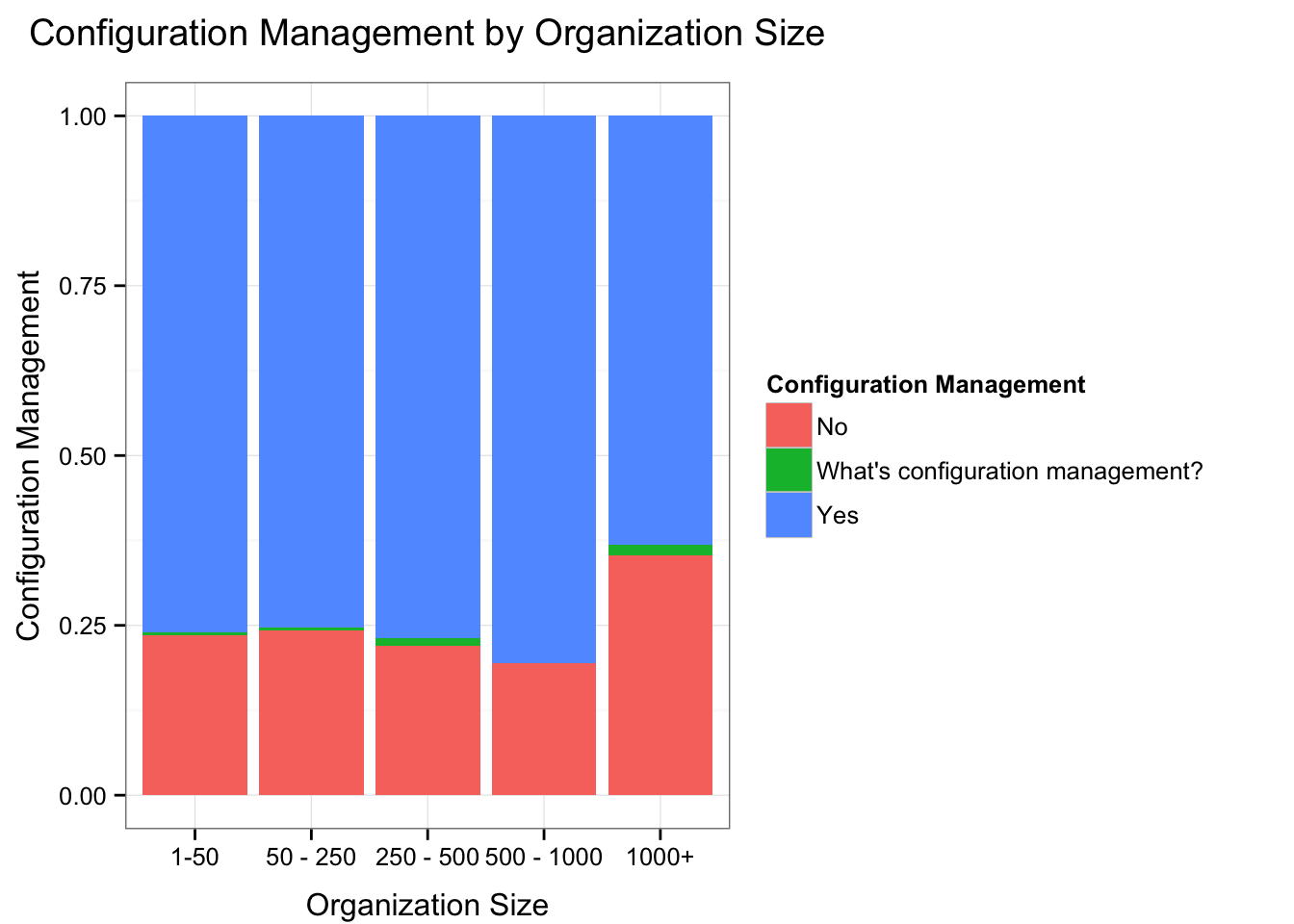

I also analyzed the responses by organization size.

The results here also fit my anecdotal experience, with a substantially smaller share of larger organizations using configuration management to manage their monitoring.